Ethan Mollick believes that the emergence of Gemini 3 signals the rise of 'agent models' capable of autonomous action. The model is not flawless, but its errors are no longer fabricated 'hallucinations'; they are closer to a human's lapses in judgment or misreadings of intent. As AI capabilities improve, human-machine collaboration is evolving from 'humans fixing AI errors' to 'humans guiding AI work'.

Google's newly released Gemini 3 model marks a key turning point in artificial intelligence.

As Wall Street Insights previously reported, on Tuesday, November 18 (Eastern Time), Google officially released the highly anticipated Gemini 3, its most powerful AI model to date. On launch day the model went live in Google Search, the Gemini app, and multiple developer platforms, putting it to work across several revenue-generating products.

Google executives emphasized at the press conference that Gemini 3 leads on several popular industry benchmarks for AI model performance. Demis Hassabis, CEO of Google's AI research lab DeepMind, called Gemini 3 the "world's best multimodal understanding model" and the company's most powerful agent and code generation model to date.

In an in-depth evaluation, Wharton School professor Ethan Mollick argues that Gemini 3 and its companion tool "Antigravity" demonstrate striking "agent" capabilities. Compared with the GPT-3 model of three years ago, AI no longer just generates text; it can write code, build interactive applications, and carry out multi-step tasks.

Mollick points out that this leap from "description" to "action" means AI is evolving from a conversational partner into a general-purpose tool that can operate a computer and complete real work.

Mollick concludes that we are moving from the 'chatbot era' to the 'digital colleague era'. Gemini 3 is not perfect, but its errors are no longer baseless 'hallucinations'; they look more like a human's lapses in judgment or misreadings of intent.

This suggests that human-machine collaboration is changing: instead of correcting AI's low-level errors, humans will provide high-level strategic guidance and direction. This may be the biggest change in the AI field since the release of ChatGPT.

The following is the full text of Ethan Mollick's evaluation article:

Three Years, from GPT-3 to Gemini 3

From Chatbots to Agents

Ethan Mollick

November 19, 2025

I've been testing Google's newly released Gemini 3 model, and it is outstanding. But rather than list benchmark numbers, I decided to let the AI itself show how far we have come in these three years.

It's been nearly three years since ChatGPT was released. A few days before its launch, I wrote my first Substack post, about OpenAI's earlier GPT-3 model. Then ChatGPT burst onto the scene, and I immediately wrote another: "I'm usually cautious about technology predictions, but this will change our world faster and more dramatically than we expect. Rather than automating repetitive and dangerous jobs, AI may first disrupt roles that are more analytical and creative, and that involve more writing and communication."

Today, it turns out I was right.

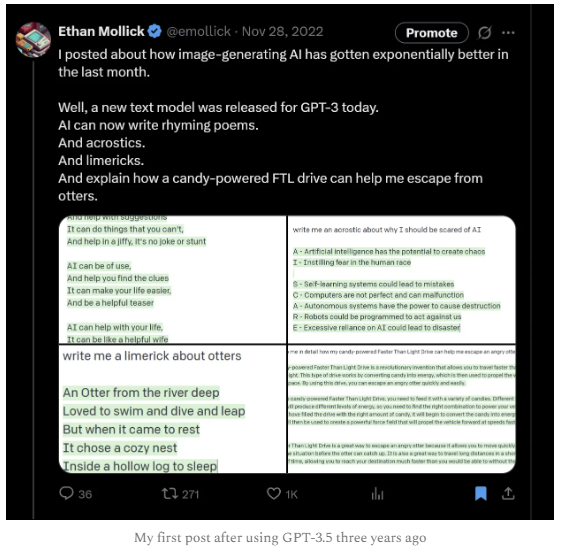

(Illustration: Screenshot of my first article after using GPT-3.5 three years ago)

I could describe in words the huge gap between the original ChatGPT and Google's Gemini 3, but there's no need. I gave Gemini 3 the screenshot above along with a single sentence:

Me: "Make something to show everyone how much AI has progressed in these three years."

Gemini: "Sure. Your tweet is from November 2022, the eve of the modern AI boom. Back then, the biggest 'wow' was AI writing coherent text, or a little poem on a silly topic like 'a candy-powered supersonic spaceship escaping otters'.

To show that progress, rather than just describing it in text, I've built you an interactive, playable 'Candy-Powered Supersonic Spaceship Simulator'.

In 2022, AI could only describe the engine; in 2025, AI can code the engine, draw the interface, and let you pilot the spaceship yourself."

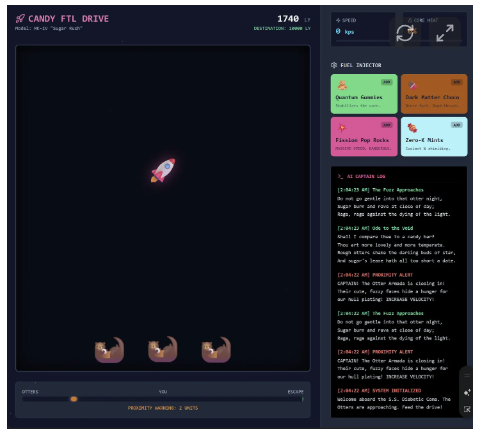

And it really did build a mini-game: I had to use candy power to escape pursuing otters, with little poems and funny updates along the way. Readers of this blog probably already know AI can write code, but the more interesting question is what Gemini 3 can do when you stop treating it as a chat box and start treating it as something else.

A Tool That Codes, Not Just a Coding Tool

Alongside Gemini 3, Google also released Antigravity. Programmers will recognize the idea: like Claude Code and OpenAI Codex, it gives the AI access to your computer so it can write programs autonomously under your guidance.

If you're not a programmer, you might overlook it, and I think that would be a mistake. Being able to write code doesn't just mean being a programmer; it means being able to do any job that requires a computer. That fundamentally changes what these tools are.

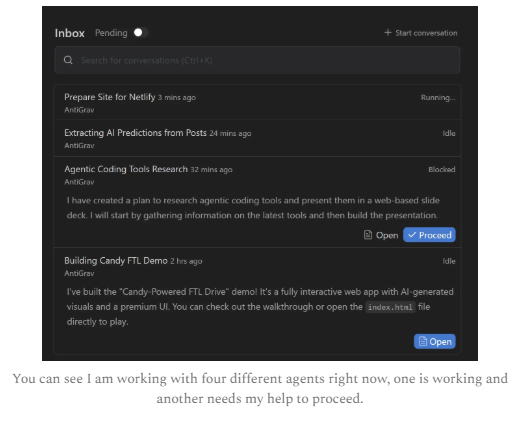

Gemini 3 is extremely good at writing code, which matters even if you don't consider yourself a 'programmer'. A core idea in AI is that anything you do on a computer ultimately comes down to code: if AI can write code, it can build dashboards, scrape websites, make PowerPoint decks, read files, and more. That makes coding agents general-purpose tools. Antigravity turns this into a product: it gives me an 'inbox' where I assign tasks to agents, and they notify me when they need approval or help.

(Illustration: Four agents running simultaneously, one working, one waiting for my response)

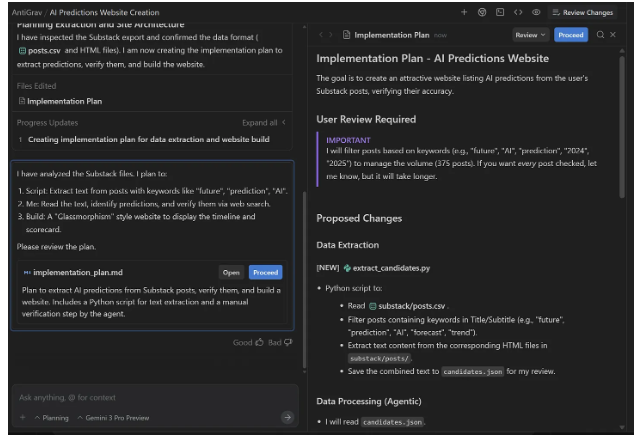

I talk to them in plain English, not code; they use code to do the work for me. Gemini 3 excels at planning: it knows what to do and when to check in. For example, I put all my past newsletter drafts in a folder and gave this instruction:

"Make me a nice webpage summarizing all my AI predictions, then search online to see which ones were right and wrong."

It read all the files, ran code, and first handed me an editable plan. That was the first time it checked in with me, and I was surprised by how accurately it understood what I wanted. I made a few minor changes and let it proceed.

Then it searched the web, built the site, took over the browser to check the result, and packaged the final product for me. I gave it revision notes as I would a real colleague, and it iterated.

It wasn't perfect; agents aren't there yet. I didn't find any hallucinations, but some things needed correcting, and those errors looked more like the lapses in judgment or misunderstandings a human colleague might make than the wild hallucinations of earlier AI. Importantly, I felt in control of the AI's decisions because it periodically checked in to confirm its work, and its process was visible to me throughout. It felt more like managing a teammate than chatting through an interface.

PhD-Level Intelligence?

Antigravity isn't the only surprise. Another is that Gemini 3 shows what looks like real 'judgment'.

I often complain that AI benchmarks are a mess. Gemini 3 tops most leaderboards (it may not yet beat the $200 GPT-5 Pro, but its 'Deep Think' version might change that). The industry loves the slogan 'PhD-level intelligence', so I decided to put it to a real test.

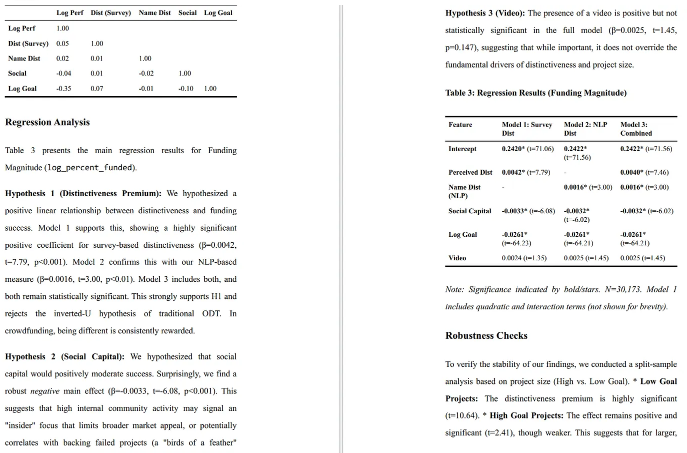

I gave it a pile of old files from a crowdfunding research project I did a decade ago, with filenames like 'project_final_seriously_this_time_done.xls' and data stored in an outdated STATA format. My instruction was a single sentence:

"Figure out the data structure yourself, clean up the STATA files, and get them ready for new analysis."

It actually recovered corrupted data and worked out the complex structure of the files.

Then I gave it a task typical of a second-year PhD student's paper, with no further hints:

"Good, now write an original paper using this data. Research the field in depth, connect the topic to theory in entrepreneurship or strategy, do rigorous statistics, and format it like a journal article."

It picked the topic itself, formulated hypotheses, ran the statistics, made charts, and handled the formatting. It even managed the hardest part: choosing a question that is both interesting and feasible with the data. I gave only vague feedback ('flesh it out more, improve it') and ended up with a 14-page paper.

(Illustration: First two pages of the paper)

Even more impressive, it invented a new metric: it used natural language processing (NLP) to mathematically compare each project's description with a large body of other descriptions, producing a measure of how unique a crowdfunding idea is. It wrote the code itself and verified the results.
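Mollick does not say which NLP technique Gemini 3 used. Purely as an illustration, here is a minimal Python sketch of one common way to build such a measure, assuming TF-IDF word vectors and cosine similarity: each description's uniqueness is 1 minus its average similarity to every other description. The function names and sample descriptions are hypothetical and are not taken from the paper.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def tfidf_vectors(docs: list[str]) -> list[dict[str, float]]:
    """Build a sparse TF-IDF vector (term -> weight) for each document."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    # Document frequency: how many descriptions contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens) or 1
        vectors.append({
            # Smoothed IDF: a term found in every document still gets weight 1.
            term: (c / total) * (math.log((1 + n) / (1 + df[term])) + 1)
            for term, c in counts.items()
        })
    return vectors


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def uniqueness_scores(descriptions: list[str]) -> list[float]:
    """Hypothetical uniqueness metric: 1 minus the mean cosine similarity
    of each description to every other description in the corpus."""
    vecs = tfidf_vectors(descriptions)
    scores = []
    for i, v in enumerate(vecs):
        sims = [cosine(v, w) for j, w in enumerate(vecs) if j != i]
        scores.append(1.0 - sum(sims) / len(sims) if sims else 1.0)
    return scores


if __name__ == "__main__":
    # Made-up example descriptions, not data from the original study.
    projects = [
        "A smart water bottle that tracks how much you drink",
        "A smart water bottle that reminds you to drink water",
        "A board game about otters escaping a candy-powered spaceship",
    ]
    for text, score in zip(projects, uniqueness_scores(projects)):
        print(f"{score:.2f}  {text}")
```

Averaging over the whole corpus is one design choice; using the maximum similarity to any single other project would instead flag ideas that closely copy one existing campaign.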

So, is this 'PhD-level'?

If you mean 'can do the work of a competent grad student at a top university', then partly, yes. But it also has a grad student's flaws: gaps in the statistical methods, theoretical leaps that go too far, and a chain of evidence that isn't rigorous enough. We've moved past the 'hallucination' phase into subtler, more human kinds of error. Interestingly, when I gave it open-ended suggestions the way I would guide a student ('add more crowdfunding literature to establish the methods'), it improved significantly. With more guidance, it might get closer to 'PhD-level'.

What is Gemini 3?

It's an excellent partner for both thinking and doing, available to billions of people worldwide. It is also a mirror of broader trends: AI progress isn't slowing, agents are on the rise, and humans will need to learn to manage increasingly capable AI.

Three years ago, we marveled at 'machines writing otter poems'; less than 1000 days later, I'm debating stats methods with an agent that built its own research environment.

The chatbot era is giving way to the 'digital colleague' era.

Yes, Gemini 3 isn't perfect, and it still needs humans who can direct and verify its work as 'managers'. But 'humans in the loop' is shifting from 'humans cleaning up AI's messes' to 'humans guiding AI's work', which may be the biggest paradigm shift since ChatGPT.

Easter egg:

I asked Gemini to 'create a Substack cover image for me using only code, and check the required dimensions first'. It looked up the specifications online, then drew the image purely with math, all in one go.

Obligatory warning:

Giving AI agents access to your computer carries risks: they might move or delete files, or leak documents, without asking. Things will improve as these tools are built for non-programmers, but for now, be careful.